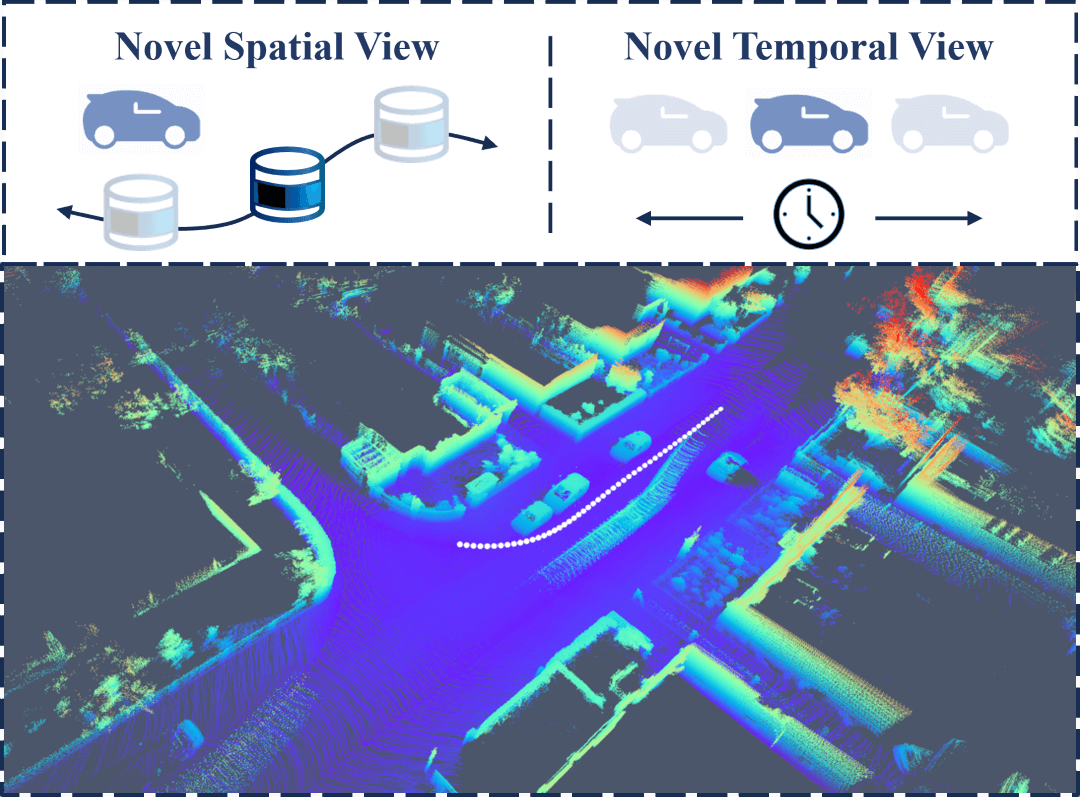

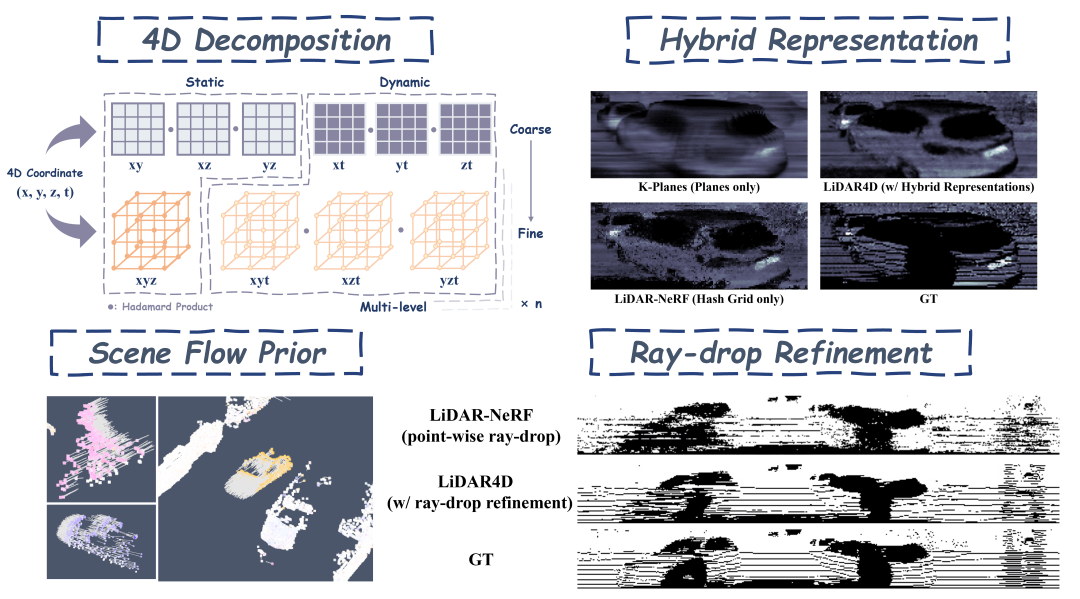

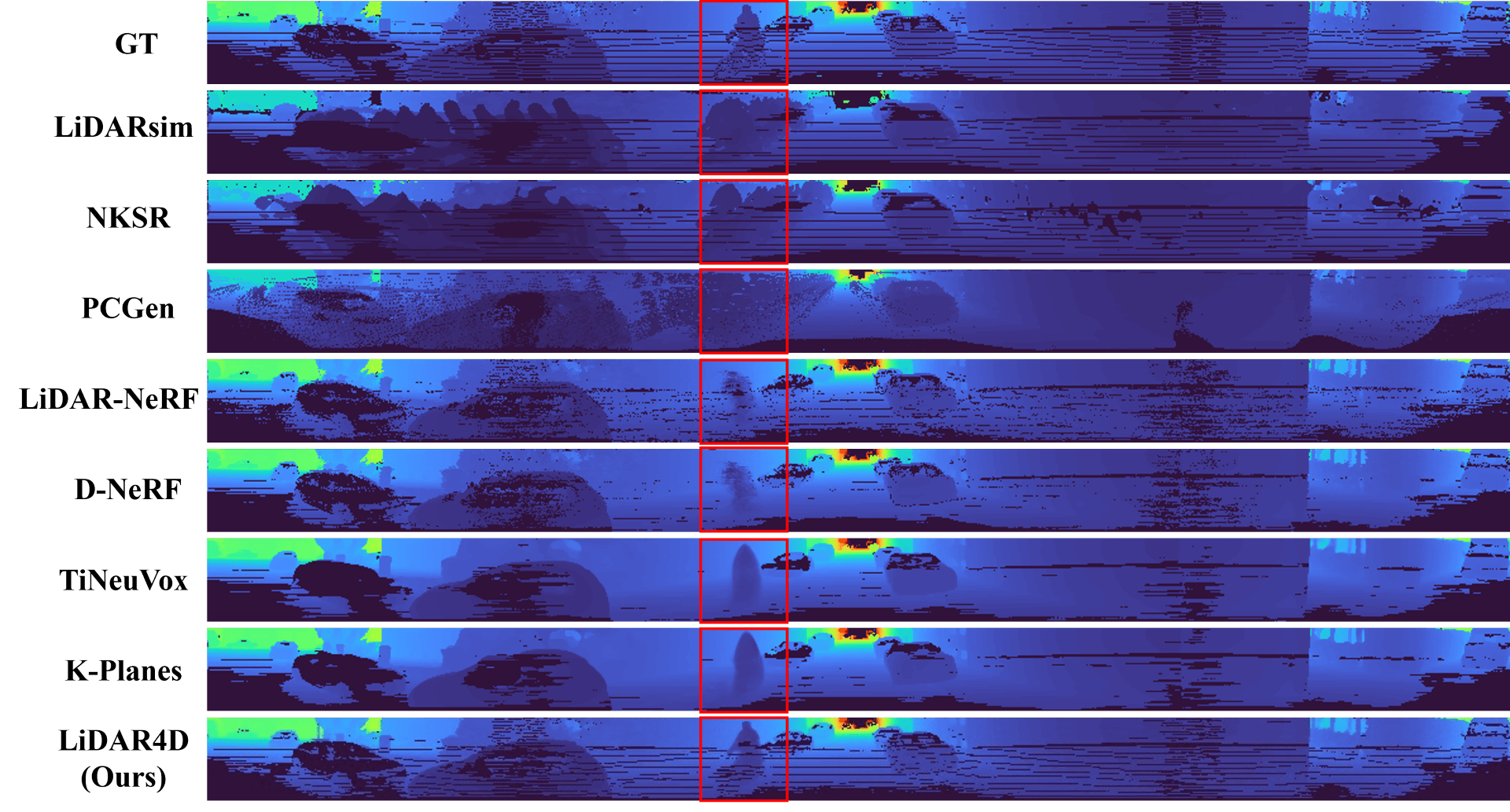

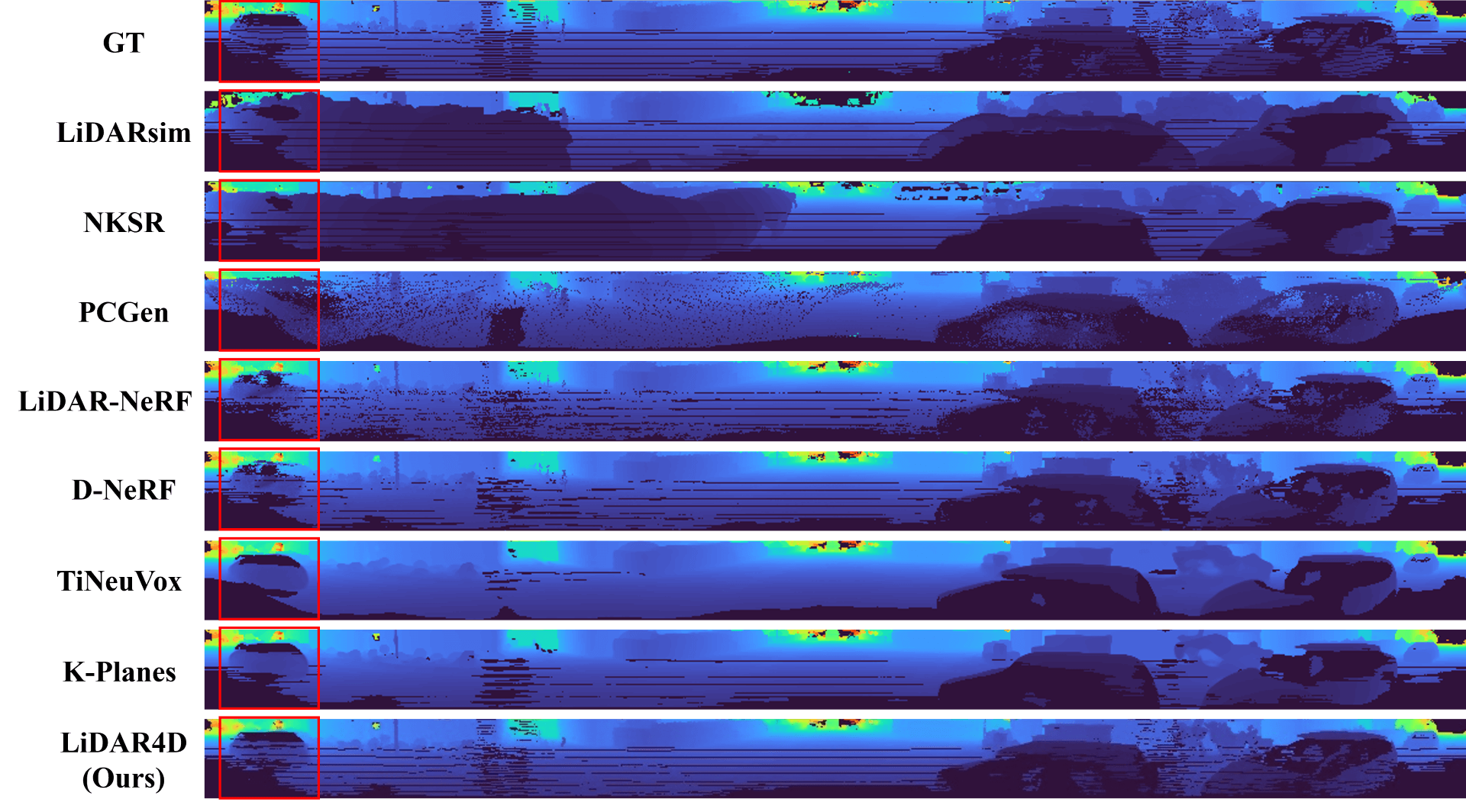

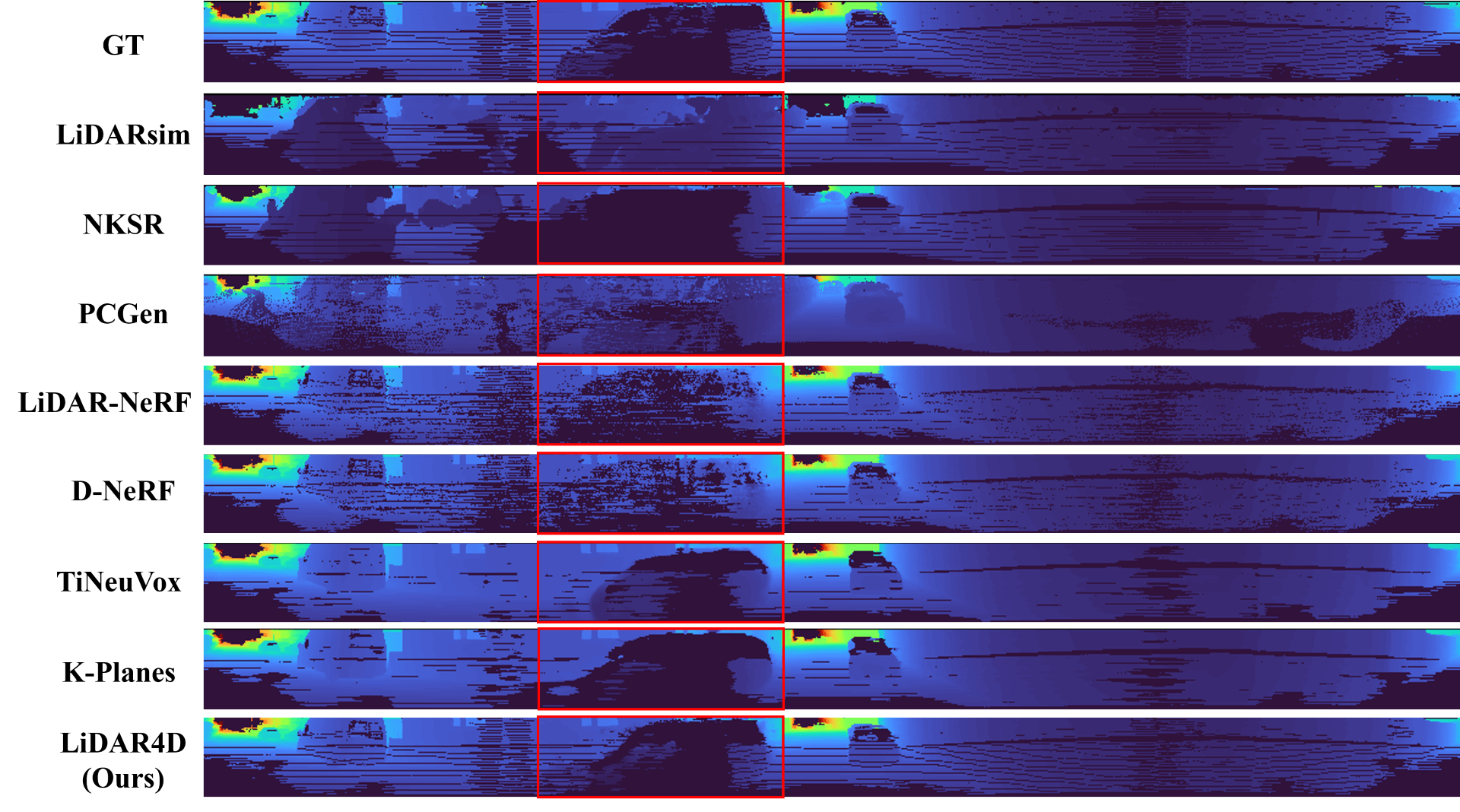

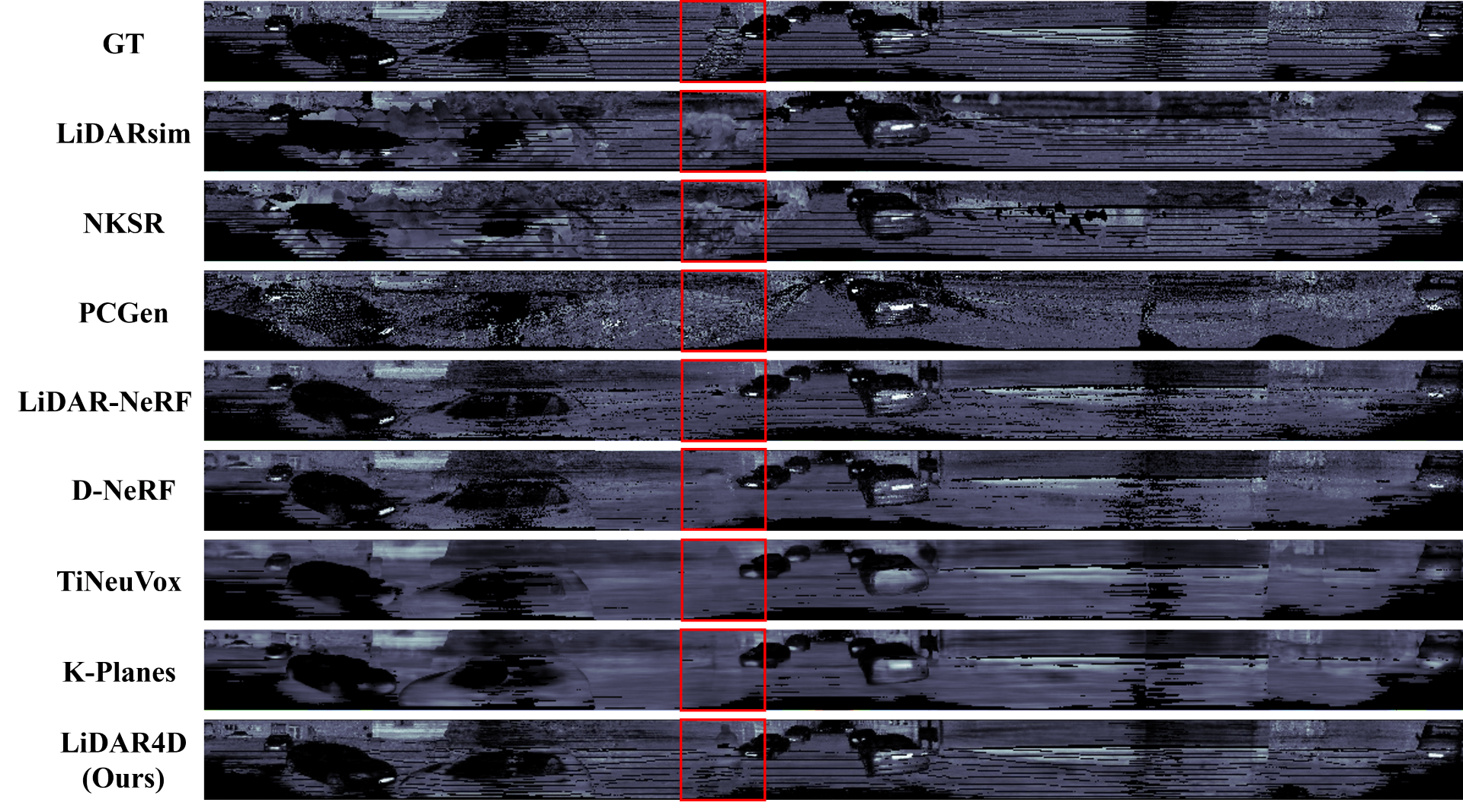

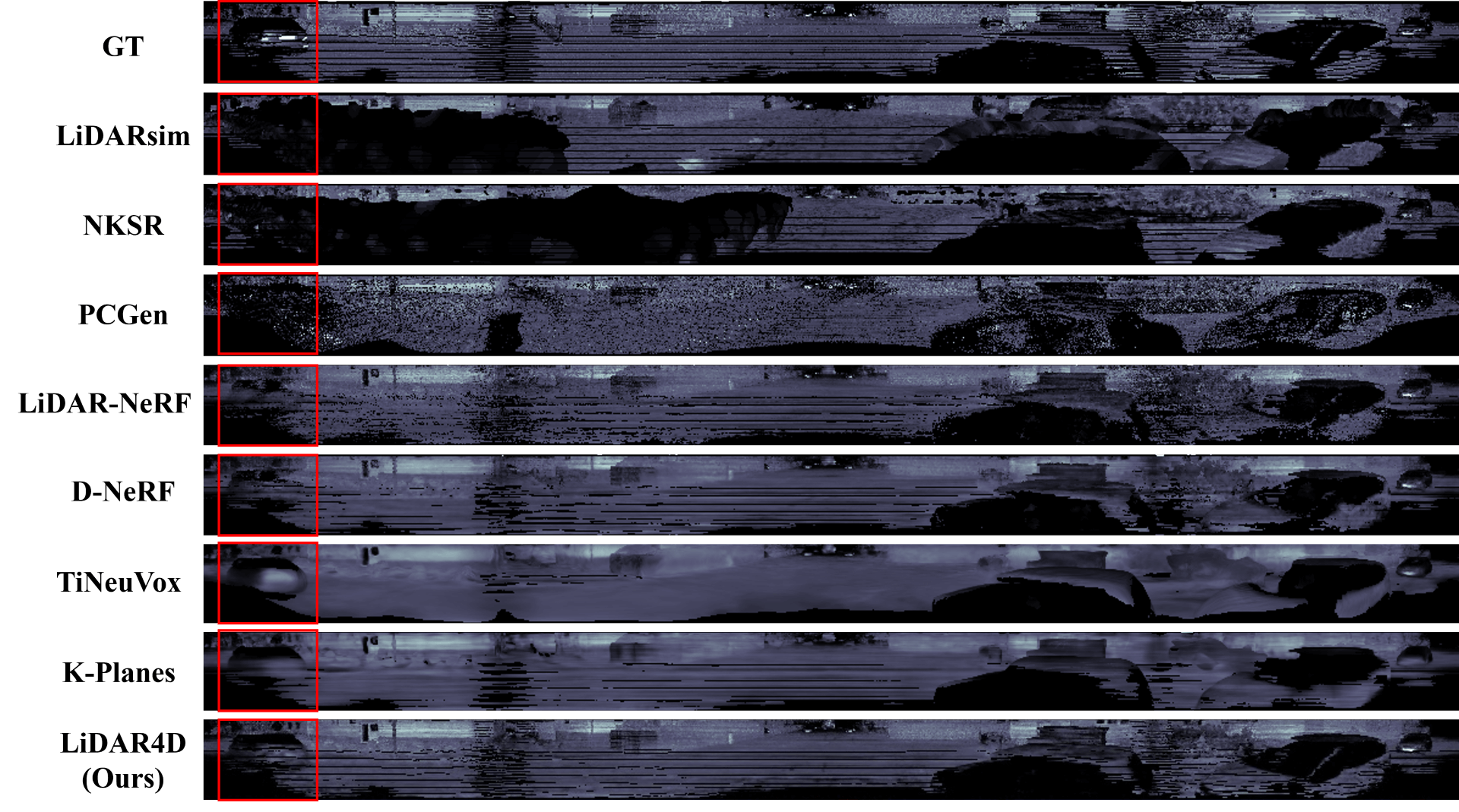

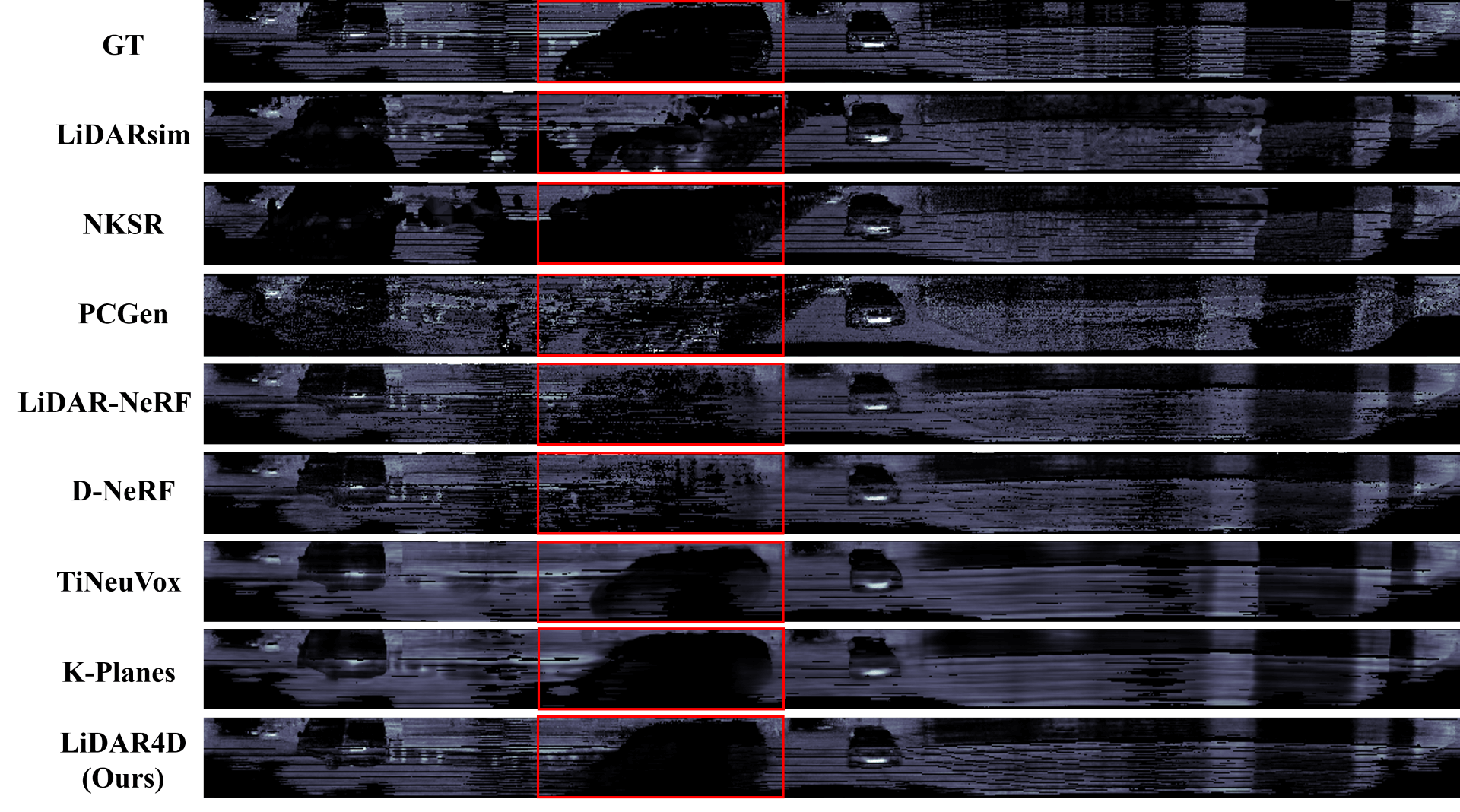

Although neural radiance fields (NeRFs) have achieved great success in novel view synthesis (NVS) for images, LiDAR NVS remains largely unexplored. Previous LiDAR NVS methods are straightforward adaptations of image NVS methods and ignore both the dynamic nature of LiDAR point clouds and the challenge of large-scale reconstruction. In light of this, we propose LiDAR4D, a differentiable LiDAR-only framework for novel space-time LiDAR view synthesis. To handle the sparsity and large scale of the data, we design a 4D hybrid representation that combines multi-planar and grid features to achieve effective reconstruction in a coarse-to-fine manner. Furthermore, we introduce geometric constraints derived from point clouds to improve temporal consistency. For realistic synthesis of LiDAR point clouds, we incorporate global optimization of ray-drop probability to preserve cross-region patterns. Extensive experiments on the KITTI-360 and nuScenes datasets demonstrate the superiority of our method in achieving geometry-aware and time-consistent dynamic reconstruction.

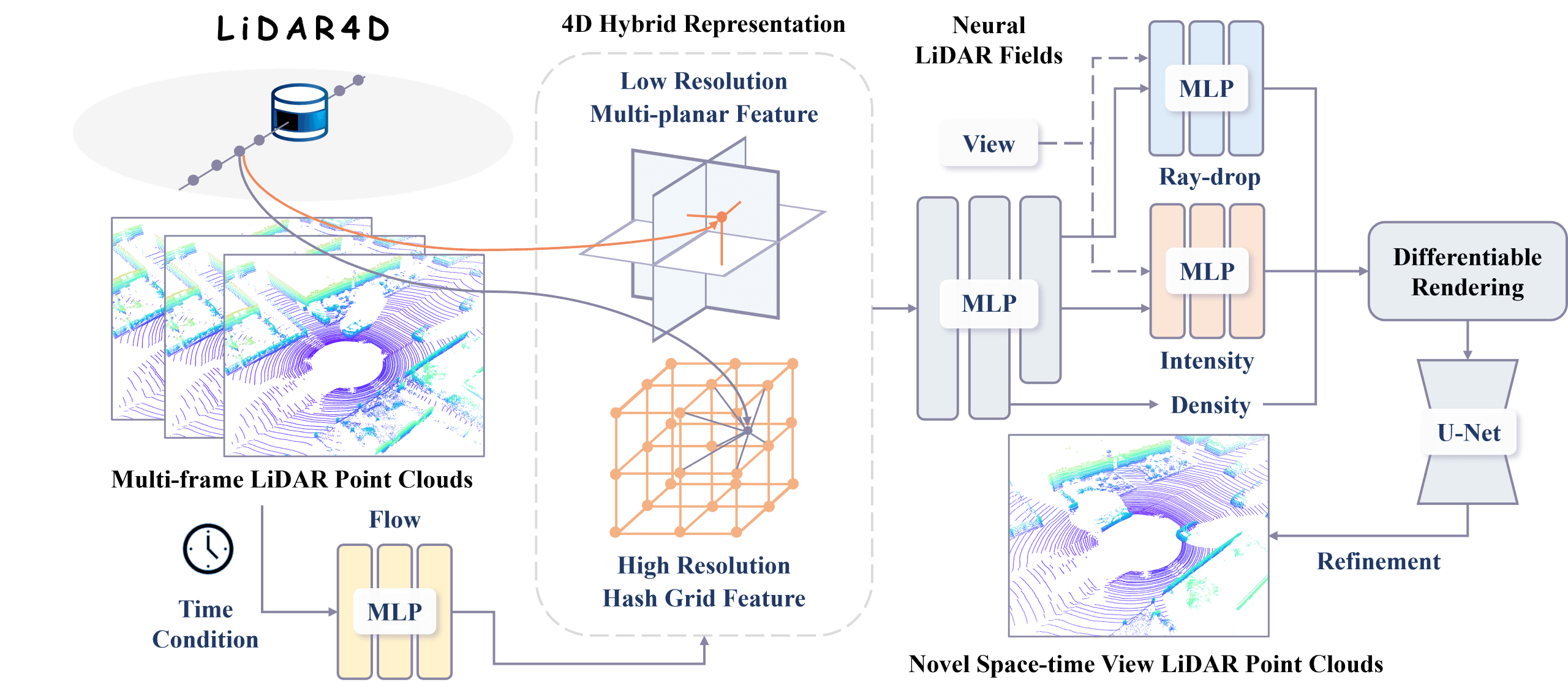

For large-scale autonomous driving scenarios, we use a 4D hybrid representation that combines low-resolution multi-planar features with high-resolution hash grid features to achieve effective reconstruction. Multi-level spatio-temporal features aggregated by a flow MLP are then fed into neural LiDAR fields to predict density, intensity, and ray-drop probability. Finally, LiDAR point clouds at novel space-time views are synthesized via differentiable rendering. In addition, we construct geometric constraints derived from point clouds for temporal consistency and globally optimize ray-drop probability for realistic generation.
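The differentiable rendering step can be illustrated with a minimal sketch. It assumes the standard NeRF-style volume rendering quadrature applied along a single LiDAR ray; the function name `render_lidar_ray`, the sample layout, and the choice to composite intensity and ray-drop with the same weights as depth are illustrative assumptions, not details taken from the LiDAR4D implementation. NumPy is used for readability; the same operations written in an autodiff framework would be differentiable with respect to the field outputs.

```python
import numpy as np

def render_lidar_ray(sigma, intensity, raydrop, t):
    """Composite per-sample field outputs along one ray (hypothetical helper).

    sigma, intensity, raydrop: per-sample predictions, shape (N,)
    t: sample distances along the ray, strictly increasing, shape (N,)
    Returns rendered depth, intensity, ray-drop value, and the weights.
    """
    # Interval lengths between samples; the last interval reuses the previous spacing.
    delta = np.diff(t, append=t[-1] + (t[-1] - t[-2]))
    # Opacity of each interval from its density.
    alpha = 1.0 - np.exp(-sigma * delta)
    # Transmittance: fraction of the ray that reaches sample i unoccluded.
    trans = np.cumprod(np.concatenate(([1.0], 1.0 - alpha[:-1])))
    weights = trans * alpha
    # Expected values along the ray under the rendering weights.
    depth = np.sum(weights * t)
    rendered_intensity = np.sum(weights * intensity)
    rendered_raydrop = np.sum(weights * raydrop)
    return depth, rendered_intensity, rendered_raydrop, weights

# Toy ray: empty space except for one very dense sample at distance 5.
t = np.linspace(1.0, 10.0, 10)
sigma = np.zeros(10)
sigma[4] = 1e3
intensity = np.full(10, 0.3)
raydrop = np.full(10, 0.1)
depth, inten, drop, weights = render_lidar_ray(sigma, intensity, raydrop, t)
```

In this toy case almost all of the rendering weight falls on the dense sample, so the rendered depth is close to that sample's distance and the rendered intensity is close to its predicted intensity.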

@inproceedings{zheng2024lidar4d,
  title     = {LiDAR4D: Dynamic Neural Fields for Novel Space-time View LiDAR Synthesis},
  author    = {Zheng, Zehan and Lu, Fan and Xue, Weiyi and Chen, Guang and Jiang, Changjun},
  booktitle = {Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR)},
  year      = {2024}
}